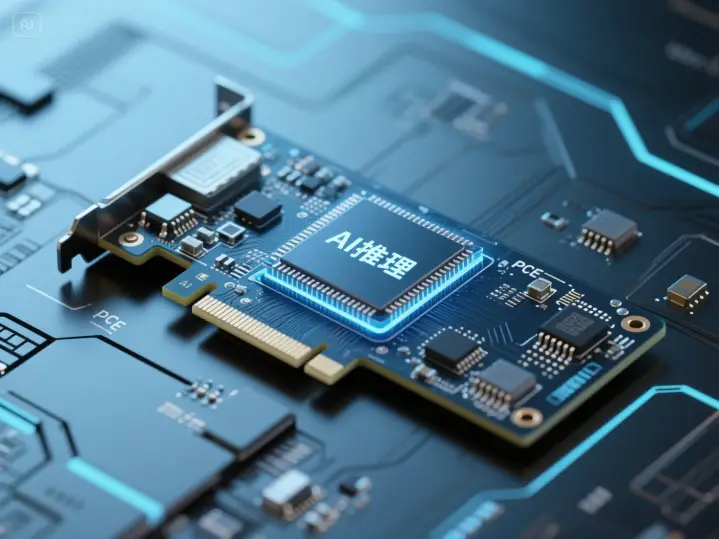

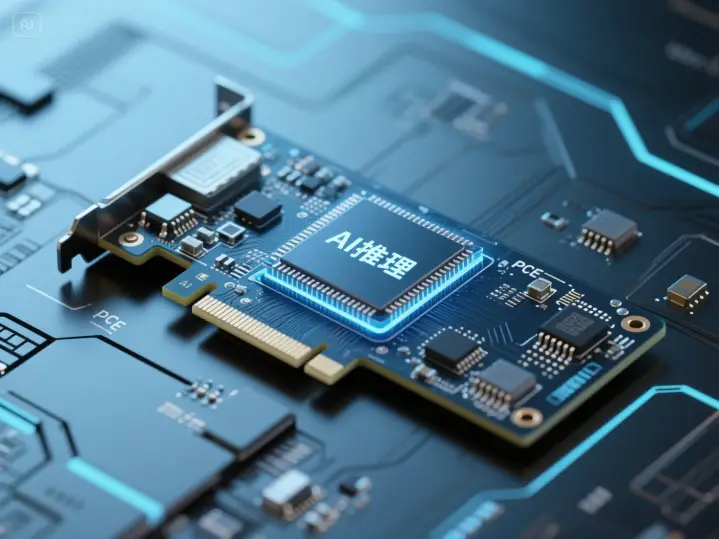

Xuanyu LLM Inference Card: Unlocking a New Chapter in Efficient Large Language Model Inference

As artificial intelligence evolves rapidly, large language models (LLMs) are being applied ever more widely. From intelligent customer service to content creation, and from knowledge Q&A to personalized recommendations, LLMs are reaching into many aspects of daily life. Efficient LLM inference, however, remains a technical challenge: high compute demand and high energy costs have limited wider adoption. The Xuanyu LLM Inference Card is a product designed to address this problem.

Self-developed LPU, Optimized for Language Models

The core of the Xuanyu LLM Inference Card is its self-developed LPU (Language Processing Unit). Unlike general-purpose GPUs, the LPU is deeply optimized for sparse computation, low-precision operations, and the attention mechanisms of Transformer models. This purpose-built design allows the LPU to deliver higher compute density and better energy efficiency on LLM inference tasks.
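To make "low-precision operations" concrete, here is a minimal sketch of symmetric int8 quantization in plain Python. It illustrates the general technique only. The source does not describe the LPU's actual number formats, so the function names and the int8 choice are assumptions made for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to int8 codes (illustrative)."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero input, where any scale would work.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only to show the round trip.
weights = [0.5, -1.27, 0.0, 0.9]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, f"max round-trip error = {max_error:.6f}")
```

Each weight is stored in one byte instead of four, and the round-trip error stays within half a quantization step. That is the kind of trade-off that hardware built for low-precision arithmetic is meant to exploit.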

High Performance, Low Power Consumption

The Xuanyu LLM Inference Card supports language models with up to 32 billion parameters and delivers a token throughput of more than 2,000 per minute, enough to serve large-scale concurrent inference. Thanks to the LPU's efficient architecture, the card's nominal power consumption is only about 120 W, well below the 250-300 W typical of comparable GPU products. For the same compute, the card can therefore substantially reduce a data center's energy use and cooling load, saving operators considerable operating costs.
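The power figures above translate into energy savings with simple arithmetic. The sketch below uses the 120 W and 250-300 W values from the text. It assumes continuous operation all year and equal delivered compute on both sides, neither of which the source states, and the helper names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: the card runs continuously all year


def annual_energy_kwh(power_watts, hours=HOURS_PER_YEAR):
    """Energy drawn over `hours` at a constant power level, in kWh."""
    return power_watts * hours / 1000


def power_reduction(card_watts, gpu_watts):
    """Fraction by which the card's power draw is below the GPU's."""
    return 1 - card_watts / gpu_watts


CARD_WATTS = 120               # nominal figure from the text
GPU_WATTS_RANGE = (250, 300)   # comparison range from the text

card_kwh = annual_energy_kwh(CARD_WATTS)
for gpu_watts in GPU_WATTS_RANGE:
    saved_kwh = annual_energy_kwh(gpu_watts) - card_kwh
    print(
        f"vs {gpu_watts} W GPU: "
        f"{power_reduction(CARD_WATTS, gpu_watts):.0%} lower power, "
        f"{saved_kwh:.1f} kWh saved per card per year"
    )
```

Under these assumptions the card draws 52% to 60% less power than the GPU range quoted. Actual savings depend on utilization and on how much work each device completes per watt.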

Flexible Expansion, Meeting Large-scale Deployment

The Xuanyu LLM Inference Card also handles large-scale deployments. It supports multi-chip interconnection with near-linear performance scaling, so capacity can grow with the compute demands of large language model inference. This scalability makes the card suitable for AI applications of any size, from small start-up teams to large technology companies.
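"Near-linear" scaling can be modeled with a single efficiency factor for capacity planning. The sketch below assumes that each card after the first contributes 95% of its standalone throughput. That value is a hypothetical placeholder, not a published figure, and the per-card rate reuses the 2,000 tokens per minute quoted earlier.

```python
def aggregate_throughput(cards, per_card, efficiency=0.95):
    """Total throughput when every card after the first adds
    `efficiency` times its standalone rate (assumed scaling model)."""
    if cards <= 0:
        return 0.0
    return per_card * (1 + (cards - 1) * efficiency)


def cards_needed(target, per_card, efficiency=0.95):
    """Smallest number of cards whose aggregate throughput meets `target`."""
    if per_card <= 0 or efficiency <= 0:
        raise ValueError("per_card and efficiency must be positive")
    cards = 1
    while aggregate_throughput(cards, per_card, efficiency) < target:
        cards += 1
    return cards


PER_CARD_TOKENS_PER_MIN = 2000  # per-card figure from the text

for n in (1, 2, 4, 8):
    total = aggregate_throughput(n, PER_CARD_TOKENS_PER_MIN)
    print(f"{n} card(s): {total:.0f} tokens/min")

print("cards for 10,000 tokens/min:", cards_needed(10_000, PER_CARD_TOKENS_PER_MIN))
```

With perfectly linear scaling (an efficiency of 1.0), five cards would reach 10,000 tokens per minute. At the assumed 95%, the model calls for six, which shows why the measured scaling efficiency matters when sizing a deployment.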

Seamless Integration, Easy Deployment

The Xuanyu LLM Inference Card is also highly compatible. It works with mainstream AI frameworks and middleware such as TensorFlow and PyTorch, so developers can integrate it into existing AI systems with little effort. Xuanyu also provides extensive development documentation and sample code, lowering the barrier to entry so that even beginners can get started quickly.

Wide Application Scenarios, Promoting Industry Transformation

With its high performance, low power consumption, and easy integration, the Xuanyu LLM Inference Card has broad application prospects across many industries. In sectors such as e-commerce and finance, where customer service inquiries are frequent, it can power highly concurrent online intelligent customer service systems, delivering smooth, lag-free conversations while greatly reducing operating costs. On live-streaming platforms, it can act as a real-time interactive assistant, handling tasks such as moderating bullet comments (danmaku) and suggesting talking points for hosts, which improves interactivity and the viewer experience. The card can also be applied to enterprise intelligent knowledge base Q&A systems to improve the efficiency and accuracy of internal document retrieval.

The Xuanyu LLM Inference Card marks a new stage in efficient large language model inference. With its self-developed LPU architecture, high performance, low power consumption, and broad application prospects, it provides strong support for the wider adoption of AI technology. As AI continues to develop, the Xuanyu LLM Inference Card is expected to prove its value in more fields and to help drive continued innovation in artificial intelligence.

Source: https://www.honganinfo.com/computing-power/inference-chip/